Change Tracking has a similar name to Change Data Capture and has caused some minor confusion. Change Tracking, however, is entirely different and serves a separate purpose.

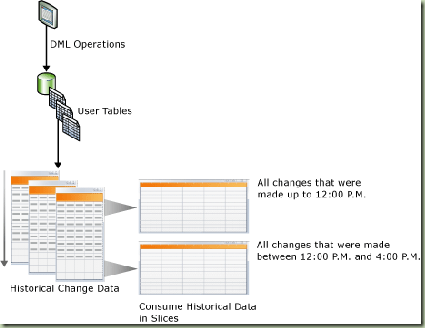

CDC is an asynchronous process that captures row level changes and stores them in special change tables. This information is available as relational data and can be queried by client applications.

Change Tracking is synchronous and tracks data changes but stores only the fact that they were changed and the last value for the row. Both one-way and two-way synchronization is supported, but remember that with two-way conflict detection is supported but the client is responsible for handling it.

Change Tracking uses tracking tables to store the primary key of the modified rows along with version numbers. It is easy to detect version conflicts.

- An application requests the version number for a row it intends to modify.

- If the version has changed since the last request, there is a conflict.

- Solving the conflict is up to the requesting application.

In order to work with Change Tracking there are a few steps to do.

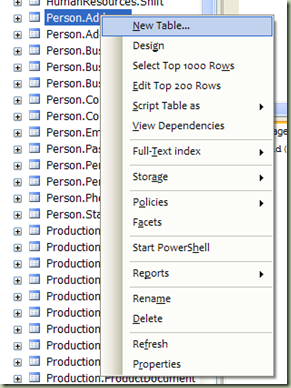

- Enable Change Tracking at the database level.

- Enable Change Tracking at the table level.

- Use CHANGE_TRACKING_MIN_VALID_VERSION function to get the minimum version, which is an integer. This value can be used to get the changes for a specific table.

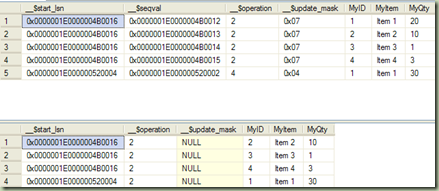

- Use CHANGETABLE function to get information , such as changes, types of changes, columns that changed, etc.

- Use CHANGE_TRACKING_CURRENT_VERSION function to get the current version. This is set by the last transaction committed in the database.

- Use the WITH CHANGE_TRACKING_CONTEXT() hint to specify a context for data modifications. This allows data modifications to be grouped according to client or application.

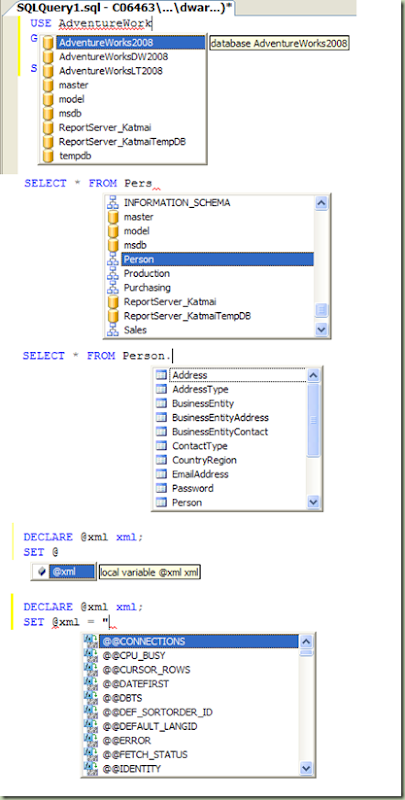

Let's look at some code that will show some of the basics.

USE master;

GO

--Create a test database if necessary

IF NOT EXISTS(SELECT * FROM sys.databases WHERE [name]=N'MyDB')

CREATE DATABASE MyDB;

GO

--Configure the database to allow change tracking

IF NOT EXISTS(SELECT * FROM sys.change_tracking_databases

WHERE database_id = (SELECT database_id FROM sys.databases WHERE [name]=N'MyDB'))

ALTER DATABASE MyDB SET CHANGE_TRACKING = ON

(CHANGE_RETENTION = 2 DAYS, AUTO_CLEANUP = ON);

GO

--Configure the database to use snapshot isolation

ALTER DATABASE MyDB SET ALLOW_SNAPSHOT_ISOLATION ON;

GO

USE MyDB;

GO

--Create a test table

IF NOT EXISTS(SELECT * FROM sys.tables WHERE [name]=N'testTbl')

CREATE TABLE testTbl(testID INT IDENTITY(1,1) PRIMARY KEY

NOT NULL, VAL VARCHAR(64));

GO

--Enable change tracking on the table

IF NOT EXISTS(SELECT * FROM sys.change_tracking_tables WHERE [object_id] = OBJECT_ID(N'testTbl'))

ALTER TABLE testTbl ENABLE CHANGE_TRACKING;

GO

--Examples of using the Change Tracking functions.

SELECT CHANGE_TRACKING_CURRENT_VERSION();

SELECT CHANGE_TRACKING_MIN_VALID_VERSION(OBJECT_ID(N'testTbl'));INSERT INTO testTbl(VAL) VALUES('V01');

SELECT CHANGE_TRACKING_CURRENT_VERSION();

SELECT CHANGE_TRACKING_MIN_VALID_VERSION(OBJECT_ID(N'testTbl'));

INSERT INTO testTbl(VAL) VALUES('V02'),('V03'),('V04');

SELECT CHANGE_TRACKING_CURRENT_VERSION();

SELECT CHANGE_TRACKING_MIN_VALID_VERSION(OBJECT_ID(N'testTbl'));

The example code above shows the initial values for Change Tracking as well as the changes when you update a row's data. To use Change Tracking you need to reconnect to check that you have a valid version of the data before you process it. Below shows the one-way synchronization scenario.

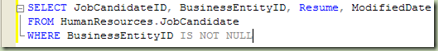

--This shows the one way sync scenario SET TRANSACTION ISOLATION LEVEL SNAPSHOT; DECLARE @Last_sync_version INT = 0; DECLARE @sync_version INT; --Use transaction for consistency BEGIN TRANSACTION --Make sure the last_sync_version is valid IF (@Last_sync_version < CHANGE_TRACKING_MIN_VALID_VERSION(OBJECT_ID(N'testTbl'))) RAISERROR(N'Invalid value', 10, 1); ELSE BEGIN --Get the current for the next sync SET @sync_version = CHANGE_TRACKING_CURRENT_VERSION(); --Show the current tracking version and change version --for each row. SELECT testTbl.testID, testTbl.VAL, CT.SYS_CHANGE_VERSION CV, CT.SYS_CHANGE_CREATION_VERSION CCV, CT.SYS_CHANGE_OPERATION CO, CT.SYS_CHANGE_COLUMNS CC, CT.SYS_CHANGE_CONTEXT CContext FROM CHANGETABLE(CHANGES testTbl, @Last_sync_version) AS CT LEFT JOIN testTbl ON testTbl.testID = CT.testID; SELECT @sync_version; END COMMIT TRANSACTION GO --Show another session updating the data UPDATE testTbl SET VAL='New V01' WHERE testID = 1; INSERT INTO testTbl(VAL) VALUES('V05'); DELETE FROM testTbl WHERE testID = 3; --The initial session reconnects to get --changes SET TRANSACTION ISOLATION LEVEL SNAPSHOT; DECLARE @Last_sync_version INT = 2; DECLARE @sync_version INT; BEGIN TRANSACTION --Check that you have a valid version IF(@Last_sync_version < CHANGE_TRACKING_MIN_VALID_VERSION(OBJECT_ID(N'testTbl'))) RAISERROR(N'Invalid value', 10, 1); ELSE BEGIN SET @sync_version = CHANGE_TRACKING_CURRENT_VERSION(); SELECT testTbl.testID, testTbl.VAL, CT.SYS_CHANGE_VERSION CV, CT.SYS_CHANGE_CREATION_VERSION CCV, CT.SYS_CHANGE_OPERATION CO, CT.SYS_CHANGE_COLUMNS CC, CT.SYS_CHANGE_CONTEXT CContext FROM CHANGETABLE(CHANGES testTbl, @Last_sync_version) AS CT LEFT JOIN testTbl ON testID.Tbl.MyID = CT.MyID; SELECT @sync_version; END COMMIT TRANSACTION GO

There is also two-way synchronization, which is shown below. In the scenario shown, an attempt is made to update the first row. If it can't update the row, the execution path follows the conflict resolution code. This example only uses a table with 2 columns, but in real-world scenarios a table will have far more columns, some of which may have been updated. In this situation, if you are updating columns in a table that haven't been updated, you may choose to proceed.

--Two-way synchronization scenario. --Assumes the last sync occurred when the sync value was 2 SET TRANSACTION ISOLATION LEVEL SNAPSHOT; DECLARE @Last_sync_version INT = 2; DECLARE @sync_version INT; DECLARE @current_row_version INT; --Find the current row version for testID=1 SELECT @current_row_version = ISNULL((SELECT CT.SYS_CHANGE_VERSION FROM CHANGETABLE(VERSION testTbl, (testID), (1)) CT),0) SELECT @current_row_version; BEGIN TRANSACTION IF (@Last_sync_version < CHANGE_TRACKING_MIN_VALID_VERSION(OBJECT_ID(N'testTbl'))) RAISERROR(N'Invalid value',10,1); ELSE BEGIN --Attempt to update the 1st row UPDATE testTbl SET VAL = 'NewAppValue' FROM testTbl WHERE testID = 1 AND @Last_sync_version >= @current_row_version; END IF (@@ROWCOUNT = 0) BEGIN --A conflict is indicated by the execution path (@@ROWCOUNT = 0) --Gather more information to see if the VAL column has been updated. IF EXISTS(SELECT CHANGE_TRACKING_IS_COLUMN_IN_MASK(2, CT.SYS_CHANGE_COLUMNS) CC FROM CHANGETABLE(CHANGES testTbl,@Last_sync_version) CT WHERE CT.testID = 1 AND CHANGE_TRACKING_IS_COLUMN_IN_MASK(2,CT.SYS_CHANGE_COLUMNS)=1) RAISERROR(N'The column VAL was changed', 10, 1); RAISERROR(N'An update conflict has occurred', 10, 1); END COMMIT TRANSACTION

Change Tracking fits into Microsoft's "Connect to your data from any device" theme for SQL Server 2008, which is made up of the Microsoft Sync Framework, Sync Services for ADO.Net, SQL Server Compact Edition 3.5. It provides for conflict detection and easy data retrieval of changes with minimum performance impact, and automatic setting for data retention. There are several advantages, such as no schema changes on the tracked tables (no need for a timestamp column), security is at the table level and prevention of loopbacks is easy.

As always, please leave your comments or send email to sql.slinger@gmail.com. I'm always interested in your feedback.

Until next time, happy slinging.