SQL Server 2008 includes several enhancements geared toward improving productivity and customer satisfaction. Some of them have long been sought after by SQL Server database developers and administrators.

IntelliSense!

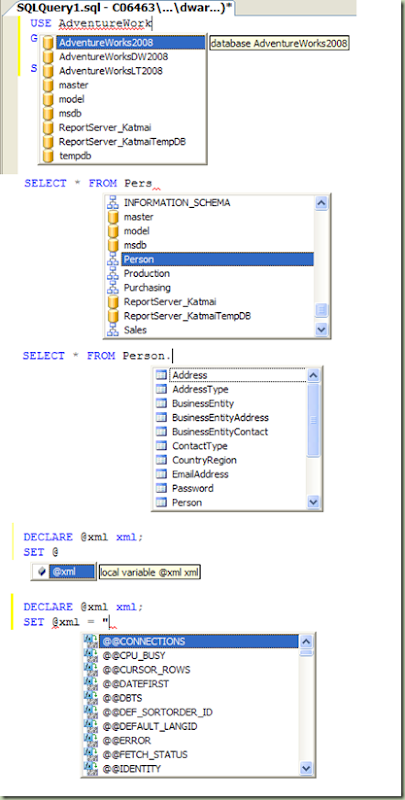

Yes, we have long awaited the ability to write T-SQL with IntelliSense helping us code faster. IntelliSense in SQL Server 2008 provides word completion and displays parameter information for functions and stored procedures. In the XML editor, IntelliSense can show a complete element declaration. It also marks errors with red squiggly lines and lists them in the Error List window, so you can jump to the offending line by double-clicking the entry. IntelliSense is available in the Query Editor as well as the XML editor, and supports most, but not all, T-SQL syntax. The screenshot above shows IntelliSense at work.

Collapsible Regions

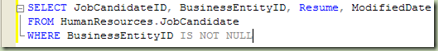

The SQL Server 2008 Management Studio Query Editor now lets you collapse and expand regions delimited by BEGIN...END blocks, as well as multi-line statements such as SELECT statements that span two or more lines. This is similar to the outlining Visual Studio has offered for a few releases now. In the screenshot above, note the '-' sign on the left; click it to collapse the text.
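As a rough illustration of the kinds of regions described here, the sketch below contains a BEGIN...END block and a SELECT statement that spans several lines. The table `dbo.Orders` and its columns are hypothetical names made up for this example; substitute objects from your own database.

```sql
-- Hypothetical table and column names, for illustration only.
DECLARE @MinTotal money = 100;   -- inline initialization is new in SQL Server 2008

IF @MinTotal > 0
BEGIN                            -- this BEGIN...END block is one collapsible region
    SELECT o.OrderID,            -- this multi-line SELECT is a region of its own
           o.CustomerID,
           o.Total
    FROM dbo.Orders AS o
    WHERE o.Total >= @MinTotal
    ORDER BY o.Total DESC;
END
```

Pasting something like this into a query window should show an outline marker next to the BEGIN line and another next to the SELECT line.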

Delimiter Matching

Also similar to Visual Studio, SQL Server 2008 Management Studio now offers delimiter matching and highlighting. When you finish typing the second delimiter in a pair, the editor highlights both delimiters, and when the cursor is on one of them you can press CTRL + ] to jump to its match. This helps you keep track of parentheses and nested blocks. Delimiter matching recognizes these delimiters:

(...), BEGIN...END, BEGIN TRY...END TRY, BEGIN CATCH...END CATCH. Brackets and quotes are not recognized for delimiter highlighting.

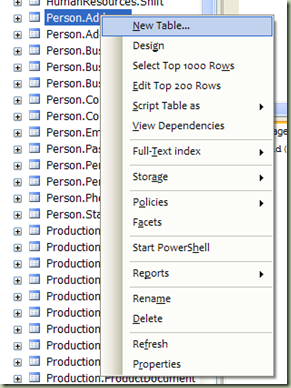
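To try delimiter matching yourself, a small batch like the following sketch exercises each of the delimiter pairs listed above. It is only an illustration: the divide-by-zero is deliberate, so that the CATCH block actually runs.

```sql
BEGIN TRY
    BEGIN
        -- Nested parentheses: place the cursor on one and press CTRL + ]
        -- to jump to its partner. The division by zero is intentional.
        SELECT (1 + (2 * 3)) / 0 AS Result;
    END
END TRY
BEGIN CATCH
    -- Report what went wrong in the TRY block.
    SELECT ERROR_NUMBER()  AS ErrorNumber,
           ERROR_MESSAGE() AS ErrorMessage;
END CATCH;
```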

SSMS Object Explorer

More choices have been added to the right-click context menu in Object Explorer. These include options for changing the table design, opening the table with a set number of rows returned, and running some of the new reports. Options for partitioning (Storage), Policies, and Indexing are provided as well.

You will notice a new "Start PowerShell" option. Look for a future post to cover the new Windows PowerShell integration.

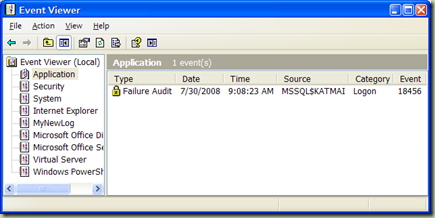

Integrated T-SQL Debugging

Debugging has now been integrated into the Query Editor! You can set breakpoints, step through code, step into code at a particular location, and set up watches to monitor variable values, along with viewing locals and the call stack. Woohoo!
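If you want something simple to step through, a short loop such as this sketch works well. The variable names are arbitrary; set a breakpoint on the line that updates `@total` and watch `@i` and `@total` change as you step.

```sql
DECLARE @i     int = 1;
DECLARE @total int = 0;

WHILE @i <= 5
BEGIN
    SET @total = @total + @i;   -- a convenient line for a breakpoint
    SET @i = @i + 1;
END

SELECT @total AS Total;         -- sum of 1 through 5, which is 15
```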

Other improvements

Multi-server queries allow you to run a query across multiple servers and return the results with the server name prepended.

Launch SQL Profiler directly from Management Studio.

Customizable tabs allow you to modify the information shown and the layout from the Tools\Options dialog.

Object Explorer Details pane has been improved for better productivity, with navigational improvements, a detailed object information pane at the bottom, and integrated object search.

Object Search allows you to search within a database on a full or partial string match and return the matching objects to the Object Explorer Details pane.

Activity Monitor, you have to see it to appreciate it. Rebuilt from scratch, it is modeled on the Windows Resource Monitor and shows graphs of processor time, waiting tasks, database I/O and batch requests. Detail grids are provided for Processes, Resource Waits, Data File I/O, and Recent Expensive Queries. It's a vast improvement for the DBA.

Performance Studio, a new performance tuning tool that collects and stores historical performance metrics and presents them through drill-through reports.

Partitioning Setup GUI, at last a way to create and manage table partitions graphically. This is accessed from the context menu for a table under Storage. A wizard will launch allowing you to create or manage partitions.

Service Broker Hooks, new context menu items that centralize access to the Service Broker T-SQL templates for messages, contracts, queues, etc. Read-only property pages are provided for each of these objects as well.

The improvements in the toolset provided with SQL Server 2008 are vast and far-reaching. There are more for you to discover, and the best way to do that is to get your hands on it. You can download SQL Server 2008 Express from Microsoft if you don't have a Developer Edition license.

Please, as always, post your comments and questions. Until next time, happy slinging.